©Copyright 2018 GEOSCIENCE RESEARCH INSTITUTE

11060 Campus Street • Loma Linda, California 92350 • 909-558-4548

MAN: CREATURE AND EXPLORER

by

J. Mailen Kootsey

Assistant Adjunct Professor of Physiology

Department of Physiology and Pharmacology

Duke University Medical Center,

Durham, North Carolina

Is your brain the same as a computer? What hope is there that reality will ever be fully understood? How does creativity fit into the scheme of physical laws? In answer to these questions, the author raises some thought-provoking ideas.

INTRODUCTION

As a person who is engaged in the study of life and who also believes in a God who reveals Himself as the sole source of life, I am faced with the following dilemma: How is it possible to believe that life can come from only one source and yet work every day as though life were so commonplace that its last mystery might evaporate at any moment? Is it necessary to split work and curiosity from philosophy and isolate them in separate compartments, or is it possible to weld them into one coordinated whole? Many scientists, even the majority, have resolved this problem in their minds by rejecting belief in a creator or by watering down His position and function to a level compatible with their supposed understanding of nature. Those who persist in retaining both belief and curiosity are often regarded as somewhat schizoid.

Man's own nature often becomes the central issue in this controversy. Man is at once a mysterious creature with free will, creativity, and a capacity for abstract thought, and a part of the living world he studies. This dual role of man as investigator and subject confuses the issue further by adding powerful emotions. How can you be scientific and objective when discussing free will in an orderly universe if you begin by assuming that you have free will and would be loath to admit otherwise?

Scientific approaches to the study of life [1] have ranged between two extremes. In the mechanistic view, everything including living matter ultimately reduces to basic laws of physics and chemistry that can be unfolded in the laboratory. The vitalist, on the other hand, draws a sharp distinction between the organic and inorganic with the former containing something different in principle. Vitalism, as an understanding of the essence of life, appeals to the human ego because it makes living matter much more special than inorganic matter; it allows the possibility that man may be different from animals in quality and not just in quantity. On the other hand, vitalism, by definition, puts the essence of life beyond the reach of science and thus is hardly a suitable companion philosophy for scientific curiosity.

The mechanistic view of life seems much more appropriate for a society that is so thoroughly committed to scientific explanation. Our society expects logical scientific understanding on every subject from our origins to the extension of our life. However, mechanistic explanations of life were resisted from the first because they point to the logical conclusion that man is also a machine, fully explainable by the laws of physics and chemistry and therefore not the unique and mysterious creature that he imagines himself to be. J. Müller [2], Claude Bernard [3] and others first suggested a century or more ago that the inner workings of the human body could be understood by the methods of science, but we are just now getting accustomed to the idea. Down deep inside we don't really want to be reduced to equations and tables and analyzed like machines.

So we are faced with two questions: Must the dignity and mystery of life disappear as we confidently go on prying loose nature's secrets? And how do we reconcile this curiosity with our belief in a creator God?

COMPLETENESS

First, I would like to consider man's capability for understanding the natural world. What is the likelihood of finding the ultimate set of natural laws, a set that could account for every fact to be discovered in nature?

The most obvious limitation to the study of the natural world is our very restricted set of facts, but there is a more fundamental limitation. To understand it we have to consider the methods of study [4]. The process starts with the compilation of facts. It is only a start, though, because facts pile up too rapidly and of themselves provide no means of making predictions about the future. The next step is to assemble a list of general statements which might summarize all the facts, along with a grammar for combining and relating the general statements. One then asks for each fact: Does it follow from the general statements alone and in combination according to the chosen grammar? If the answer is "yes," one can go on to the next fact. If "no," a change has to be made in the general statements or grammar, and the testing process starts all over again. Together, the general statements and the grammar form a theory.

It does not take much experience in theory building to see that a lot of time can be saved by substituting symbols for facts and grammatical rules. Once this has been done, it can be seen that theories in many areas of science have basically the same form. Much duplicated effort can then be saved by studying symbol manipulation by itself, independent of any specific facts; this study is the world of mathematics. It was in the middle of the 19th century that George Boole and other mathematicians began to set down the formal rules of logic used in the process of deduction going from general statements to a specific conclusion.

About the turn of the century, mathematicians addressed themselves to an important and fundamental question: Given a theory, is it always possible to decide whether a statement is or is not valid according to the theory [5], [6]? In 1919, Emil Post showed in his thesis that the propositional calculus, the elementary logic of statements joined by "and," "or," and "not," is complete, meaning that there is a guaranteed process which will affirm or deny the validity of any statement in the language of the theory. In 1930 Kurt Gödel proved a related completeness result for a much broader class of logical systems. Mathematicians were hopeful that time would bring similar proofs for all classes of theories. Thus it was a surprise when, in another paper just one year later, Gödel showed that a broad and important class of theories, those rich enough to express ordinary arithmetic, was incomplete. Even if such a theory is internally consistent, it contains statements that can be neither proved nor disproved within it, so one cannot always find out whether a statement is true or false according to the theory.
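For the simplest logic of statements built from "and," "or," "not," and "if ... then," the guaranteed decision process is the familiar truth table: list every combination of true and false for the variables and check the statement in each row. The sketch below is an illustration of that idea, not Post's own formulation; the helper names `implies` and `is_valid` are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Sequence

Formula = Callable[[Dict[str, bool]], bool]


def implies(a: bool, b: bool) -> bool:
    """Material implication: 'if a then b' is false only when a is true and b is false."""
    return (not a) or b


def is_valid(formula: Formula, variables: Sequence[str]) -> bool:
    """Decide validity by checking every true/false assignment (2**n rows, always finite)."""
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if not formula(assignment):
            return False  # found a row that falsifies the statement
    return True  # true in every row: the statement is valid


# Modus ponens written as one statement: ((p -> q) and p) -> q
def modus_ponens(e: Dict[str, bool]) -> bool:
    return implies(implies(e["p"], e["q"]) and e["p"], e["q"])


# Affirming the consequent, a classic fallacy: ((p -> q) and q) -> p
def affirming_consequent(e: Dict[str, bool]) -> bool:
    return implies(implies(e["p"], e["q"]) and e["q"], e["p"])


print(is_valid(modus_ponens, ["p", "q"]))          # True
print(is_valid(affirming_consequent, ["p", "q"]))  # False
```

The check always terminates because the number of rows is finite. Theories rich enough to include ordinary arithmetic, the kind covered by Gödel's 1931 result, admit no such guaranteed procedure.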

Applied to a specific area of science, this result means that we can never say that we have arrived at the final set of laws. Not only are we limited by a small sample of facts, but as we go on finding new ones we cannot always be sure whether a new fact requires a modification of theory. Scientists are not discouraged by this limitation, however. We proceed confidently, either using theories known to be complete or hoping not to encounter an unprovable statement. Even with something as familiar as gravity, we have to settle for the confidence gained from long experience. To be completely honest, though, we have to admit that any day or any second might bring the fact which destroys our cherished "law."

To illustrate this pragmatic approach to the understanding of nature, let us consider the properties of machines. There is a common idea that machines are "little pieces of nature" which are completely understood by man and therefore can be manipulated by him at will. The fear of mechanistic ideas of life is really the fear that sooner or later man himself will be put in the same category as the machine. On closer inspection, however, machines are not quite this simple. The "machine" really exists only as a concept in our mind; what we see and touch is an object built from our limited knowledge of nature to correspond to that concept as closely as possible. For example, take the common playground see-saw. In concept, the see-saw "machine" is a perfectly balanced lever resting on a frictionless pivot. However, in real life we are happy with an unbalanced board that may bend and crack and probably has slivers, a squeaky and rusty pivot, etc. We tolerate these differences between the concept and reality because our theories can't account for all of them and because our bodies can quickly learn to adapt to them. To be sure, some machines come a lot closer to their conceptual counterpart than a playground see-saw, but the difference is in degree, not in kind.

BRAINS AND COMPUTERS

The subject of the brain springs to mind immediately when considering the limits of the study of life. Most of us might be willing to have our digestive organs or even our senses reduced to the level of machines, but there is one part that we definitely do not want to have oversimplified and manipulated: the human nervous system. Closely related to the subject of the brain is the development of computers. It is practically a truism of our day that the influence of computers is growing and that they are a threat to human individualism and freedom. I am going to pass over that tempting point and concentrate on a more fundamental question: How have the many advances in computer hardware and program structure and the increased knowledge of the nervous system altered our understanding of the human mind? Are they edging us closer to regarding the brain as a machine and therefore completely predictable and devoid of free will?

Some scientists definitely think so. One has expressed this view in the title of a book, The Machinery of the Brain [7], whose cover shows the inner workings of an old pocket watch superimposed on a man's head. The reasoning behind such a conclusion runs roughly as follows. Computers and brains are alike in many ways. Both are information processing devices, gathering information from sensors or senses, operating on it, and producing output ranging from printed text to glandular secretions. Computers and brains are constructed alike: both are electrical in nature, and both are made up of a complex array of small, interconnected logic elements. Computers can be programmed to do many things humans do that fall under the category of "thinking," such as pattern recognition, language translation, and problem solving. The nervous systems of some insects and animals have been observed, under some circumstances, to show automatic or preprogrammed characteristics. Computers are built on a structure of strict logic and produce only predictable results. Since brains and computers are so much alike, the mind likely operates on a similar basis, except that it has many more components to work with than we are presently able to put together in one computer.

Let us now examine each of these ideas in greater detail. It is true that the brain has the characteristics of an information processing device such as the modern digital computer. This, however, is a very broad and general statement that implies little more than a relationship of cause and effect. It should not be construed as implying anything similar about the nature of the processing [8]. Crossing the Atlantic can be accomplished either by boat or by plane, but that does not imply that boats and planes are alike in principle, construction, or limitations. Even the breakdown of the nervous system into input devices, a processor, and output devices is now known to be an oversimplification. The eye was once compared to a camera that simply relayed moving pictures of the outside world to the brain. Now the eye itself is known to be an information processor; it sends on to the brain only selected and biased information. The frog's eye [9] reports to its brain only certain details of what it sees: primarily the presence of small moving shapes (insects for food) and large shapes (possible predators). Information selected by the human eye is not as specific; cells are grouped together to detect such general characteristics as edges and movement.
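The idea that the eye forwards only selected information can be illustrated, very loosely, with a toy edge detector. This sketch assumes a single row of light intensities and a hypothetical `threshold` parameter; it reports only the positions where brightness changes sharply and discards everything else. It is a textbook simplification chosen for illustration, not a model of real retinal circuitry.

```python
from typing import List, Sequence


def detect_edges(row: Sequence[float], threshold: float) -> List[int]:
    """Return indices where intensity jumps by at least `threshold` from the previous point.

    Only these positions are "sent on"; uniform regions produce no output at all.
    """
    edges = []
    for i in range(1, len(row)):
        if abs(row[i] - row[i - 1]) >= threshold:
            edges.append(i)
    return edges


# A dark region followed by a bright one: six readings reduce to a single report.
print(detect_edges([1, 1, 1, 5, 5, 5], threshold=2))  # [3]
```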

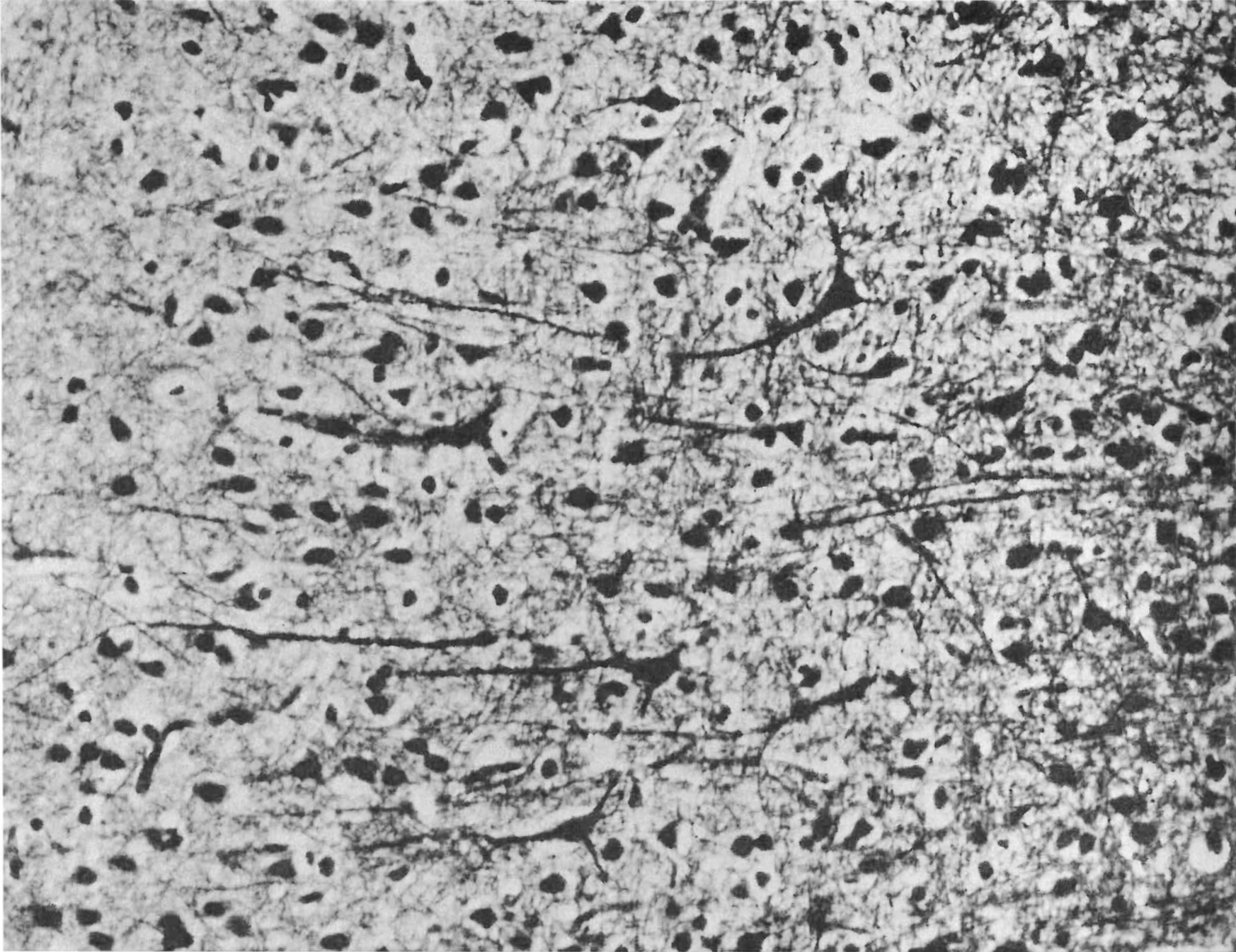

It also appears true that the brain is essentially electrical in nature, with its "circuitry" broken down into smaller elements called neurons [10]. One could even extend the analogy further and point out that neurons have a threshold, or discreteness, property producing states comparable to the "true" and "false" of digital logic. There the similarity ends. Neurons differ from logic elements both in their input-output relationships and in the number of their interconnections with other neurons (Figure 1): these average 100 to 1000 per neuron in the brain versus 10 or fewer per gate in a computer. While digital logic elements operate according to a strict mathematical formalism, we know virtually nothing about the language of the brain. Only a little is known about the input-output relationship of neurons, and we know of no system comparable to Boolean algebra to help us make use of their properties. The brain is basically a parallel device, while most digital computers are serial in operation. It has been estimated that the brain can handle 100,000 or more messages simultaneously, while the largest computers can handle fewer than 100. (Many computers appear capable of more by switching rapidly from one job to another.)
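The threshold property mentioned above is what the idealized neuron of McCulloch and Pitts (1943) captures: sum the weighted inputs and "fire" only if the sum reaches a threshold. The sketch below is that idealization, with hypothetical weights and thresholds; it shows how a single unit can mimic an AND or an OR gate, and how easily the idealization accepts a large fan-in. Real neurons, as the text stresses, are far less simple than this.

```python
from typing import Sequence


def threshold_unit(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Idealized threshold unit: fires (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
# With two inputs, the same unit acts as AND or OR depending only on its threshold.
and_outputs = [threshold_unit(p, (1, 1), threshold=2) for p in pairs]
or_outputs = [threshold_unit(p, (1, 1), threshold=1) for p in pairs]
print(and_outputs)  # [0, 0, 0, 1]
print(or_outputs)   # [0, 1, 1, 1]

# The idealization handles 1000 inputs as easily as 2, unlike a gate with 10 or fewer.
fan_in = 1000
print(threshold_unit([1] * 600 + [0] * 400, [1] * fan_in, threshold=500))  # 1
```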

The next statement about computers and brains essentially compares their behavior: Is it true that computers are now, or soon will be, able to imitate human thinking? In 1950, Turing [11] described what is now accepted as a minimum test for a machine to pass if its activity is to be called thinking. The test begins as a game with three people: a man and a woman in one room and an interrogator in another. The interrogator is allowed to ask questions of the man and woman (whom he knows only by neutral labels, say the letters A and B), and they answer his questions. The object of the game for the interrogator is to figure out which is the man and which is the woman; the man tries to lead the interrogator to the wrong identification, while the woman tries to help him reach the right one. So that voice tones don't give the answer away, the questions and answers are passed back and forth by teleprinter. Now, Turing says, suppose we substitute a computer for the man. If the computer is just as successful as the man at fooling the interrogator, then we might say that the machine is capable of "thinking."

Of course no computer has come close to passing the Turing test. The immediate goals for computer behavior have been much more modest: game playing, language translating, problem solving, and pattern recognition. Dreyfus [12] has pointed out, in a book entitled What Computers Can't Do, that in each of these areas, the pattern has been the same: early dramatic success followed in a few years by unexpected difficulties.

Machine translation, for example, began in the early 1950s; the first mechanical dictionary was produced by Oettinger in 1954. There was encouraging success at first: enough memory was assembled to hold a reasonably sized dictionary, and programs were written to replace each word with its translated counterpart. The resulting text, however, was rough and unpleasant, and in many cases simply unreadable or misleading. It was finally recognized that translation involves much more than substituting for words or phrases. The phrase "out of sight, out of mind" might, for example, turn into the equivalent of "invisible idiot"! The problem is that words and phrases frequently have more than one meaning, and the human translator selects the most likely one based on the context and on his past experience. In essence, then, what needs to be done is to give the computer not only a dictionary but also the accumulated experience of the real world, its objects and concepts, that the human translator brings to the task. In 1966, a report from the National Academy of Sciences/National Research Council concluded that machine-aided translation was worthwhile, but that there was "no immediate or predictable prospect of useful machine translation."
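Why word-for-word substitution fails can be shown with a toy example. The dictionary below is entirely hypothetical, using English glosses to stand in for a target language; each word lists several possible senses. A context-free substitution blindly takes the first sense, and even this six-word phrase already has dozens of possible sense combinations, of which a human translator picks the right one from context.

```python
import math
from typing import Dict, List

# Hypothetical toy dictionary: each English word maps to several possible senses.
SENSES: Dict[str, List[str]] = {
    "out": ["outside", "absent"],
    "of": ["of"],
    "sight": ["vision", "view"],
    "mind": ["intellect", "memory", "attention"],
}


def tokenize(text: str) -> List[str]:
    """Split on spaces, dropping commas and periods and lowercasing."""
    return [w.strip(",.").lower() for w in text.split()]


def word_for_word(text: str) -> str:
    """Replace each word with its first listed sense, ignoring all context."""
    return " ".join(SENSES[w][0] for w in tokenize(text))


def reading_count(text: str) -> int:
    """Number of distinct sense combinations a context-free substitution could produce."""
    return math.prod(len(SENSES[w]) for w in tokenize(text))


phrase = "out of sight, out of mind"
print(word_for_word(phrase))  # outside of vision outside of intellect
print(reading_count(phrase))  # 24
```

The count multiplies quickly with sentence length, which is one way to see why the missing ingredient is context and experience rather than a bigger dictionary.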

Each of the other areas has encountered similar fundamental problems. In game playing, it rapidly became obvious that only a few games, like tic-tac-toe and Nim, could be played (and won) by machines following rules that cover all possibilities. In more interesting games like chess, the total number of possible moves to be investigated mounts up too fast even for a computer. In planning a move in chess, if the computer were to consider each of the 30 possible moves of each player and look 2 moves ahead, it would have to consider $30^4 = 810{,}000$ combinations. To look 10 moves ahead (about one fourth the length of an average game) would require inspecting $30^{20}$, or more than $3 \times 10^{29}$, possibilities. At the rate of one million per second, this would take well over $10^{15}$ years, and that for just the first move! The human player obviously uses shortcuts called heuristic methods, but it is not clear what those methods are or how they are found.
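The arithmetic behind these chess figures can be checked directly. The sketch assumes, as the text does, 30 possible moves per turn for each player, counts one "move" as a turn by each player (two half-moves), and uses a 365.25-day year; the names are illustrative.

```python
BRANCHING = 30                         # possible moves per turn, as assumed in the text
SECONDS_PER_YEAR = 365.25 * 24 * 3600
RATE = 1_000_000                       # positions examined per second


def sequences(moves_ahead: int, branching: int = BRANCHING) -> int:
    """Count move sequences when each 'move' is one turn by each player (2 half-moves)."""
    return branching ** (2 * moves_ahead)


print(sequences(2))     # 810000
ten = sequences(10)
print(f"{ten:.2e}")     # 3.49e+29
years = ten / RATE / SECONDS_PER_YEAR
print(f"{years:.1e}")   # 1.1e+16
```

The exact count for ten moves ahead is about $3.5 \times 10^{29}$, so at one million positions per second the search would take on the order of $10^{16}$ years.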

In problem solving, the impasse is the computer's need to have the problem defined explicitly, while the human is able to recognize (by methods unknown) the essential elements of a problem.

We might summarize all these difficulties by saying that the human works well with a large array of ill-defined facts and in situations of considerable uncertainty while the computer must have both facts and rules defined clearly.

Some remarkable examples of automatic behavior triggered by signals from the environment have been observed in insects and animals. Consider the little Sphex wasp [13] that lays its eggs in the paralyzed body of a cricket which it catches and buries in a hole in the ground. The wasp has a very definite routine which it follows in burying the cricket: it brings the cricket to a prepared hole, lays it down and goes into the hole for a last inspection. It then comes out and carries the cricket in. If this routine is interrupted, say by moving the cricket away while the wasp is in the hole, the entire inspection routine is repeated. The wasp can be interrupted over and over again with the same result; it apparently never tires of the repetition nor does it think of omitting the inspection.
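The wasp's behavior resembles a fixed loop that keeps no memory of earlier passes. The following is a hypothetical model of the routine as described above, not a claim about wasp neurobiology; the step names and the `times_moved` parameter are invented for illustration.

```python
from typing import List


def sphex_routine(times_moved: int) -> List[str]:
    """Simulate the fixed burying routine; `times_moved` counts experimenter interruptions.

    Each interruption sends the wasp back to the start: the inspection is never skipped.
    """
    log: List[str] = []
    remaining = times_moved
    while True:
        log.append("bring cricket to burrow entrance")
        log.append("lay cricket down")
        log.append("inspect burrow")
        if remaining > 0:
            remaining -= 1
            log.append("find cricket moved")  # routine restarts from the beginning
            continue
        log.append("carry cricket in")
        return log


steps = sphex_routine(times_moved=3)
print(steps.count("inspect burrow"))  # 4
print(steps[-1])                      # carry cricket in
```

Nothing in the loop records how many inspections have already been made, which is exactly the property that makes the routine look "preprogrammed."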

Other observations frequently quoted as evidence of automatic behavior are the brain stimulation experiments in rats and other animals. An electrode is inserted into a specified region of the brain. Electrical stimulation then produces a well-defined behavior pattern such as anger, fear, or satisfaction. A rat given the opportunity to stimulate itself by pressing a bar [14] will (if the electrode is properly placed) repeatedly press the bar at a high rate ignoring fatigue, hunger, and thirst until it simply drops from exhaustion! These experiments indicate that there is probably an area in the brain responsible for each of these emotions, but they are not proof that the emotions are automatically triggered by external stimuli.

I submit that it is not appropriate to extrapolate from these observations on wasps and rats to the human brain and to state that human behavior is also programmed and automatic, only more complex. Some parts of the human nervous system are obviously automatic, like the reflex that quickly removes a hand from a hot stove, and perhaps we are governed by external stimuli to a greater degree than we would like to admit, but it does not follow that everything humans do is the result of preprogramming!

On the basis of the foregoing arguments, I conclude that there is no reason to believe that scientists will, in the near future, be able to write down a set of equations or flow chart an algorithm governing the operation of the human brain. While its study is interesting and may result in some useful aids to human thought processes, extrapolating from present progress to the final result is like the man who, after climbing to the top of the tallest tree, announces that he is on his way to the moon!

LAW PLUS CREATIVITY

It might well be argued that nature is governed by a set of fixed laws, but that man simply has little or no hope of finding the right set. All of science is based on this assumption because scientists begin by presuming nature to be regular and repeatable. The question then is: Would nature be dull and uninteresting if we did find a complete set of laws? Is the ultimate goal of science simply the determination of basic laws? I submit there is more to nature than just basic law. Before I am accused of supporting vitalism, let me give some examples and explain what I mean.

Let us suppose you are learning to play a game, one that does not depend on a random event like the roll of dice and does not require physical prowess. First you learn the rules of play and the object of the game. Would you say at that point that you have mastered the game and that it would be uninteresting to proceed further? Of course not; the really interesting part of the game, the strategy and the response to the opponent, begins after the rules are learned.

Or suppose you want to be a writer. You begin by learning the rules of grammar and syntax and possibly how to organize your material. But this is just a beginning. You will also need other abilities that are not so easily defined and taught: how to select an interesting topic, and how to choose words with skill and imagination and weave them together artfully. Similarly, you aren't an architect just because you know how to calculate the load on a support column and how thick to make the insulation. It takes something more to turn heaps of material into a structure that both fulfills a need and is pleasing to the eye.

In each of these examples, rules play an essential part. They provide the regularity and set the limits, but they are not the end of understanding, only the beginning. The interesting part is what can be done within the rules, something, I think, well described by the word "creativity." The game player is creative if he can develop a strategy that ensures the object of the game while obeying the rules. The writer is creative if he can communicate an idea successfully without alienating the reader by breaking the rules of grammar. The architect is creative if he can produce a design which is functional and esthetically interesting and which doesn't collapse under load.

What we see in the natural world can be thought of in the same terms: subject to basic laws and regularity, yes, but even if we did know those laws precisely, we still wouldn't have conquered and "explained" the natural world. Within the laws there is latitude for creativity beyond man's wildest imagination, latitude wide enough to include the complex processes of life as well as the simpler structures of the inorganic world. It is not necessary to look beyond the fundamental interactions of physics and chemistry for an explanation of life, because we have no idea of the limits to what can be accomplished using these building blocks. There is at present no shred of evidence that organic structures are subject to different laws than inorganic. There is only our skepticism that such complicated processes can come from such simple building blocks. Insofar as I can see, the main reason we have for this skepticism is the difficulty we have in being creative within these same limits and the difficulty we have in trying to figure out how Someone with more ability than ourselves has worked within the same rules.

Up to now, science has concentrated to a large extent on finding the fundamental rules in nature. I see us now undergoing a change in emphasis to a broader and much more complex study: how the fundamental building blocks and laws of nature are used to make up the structures that we see, both organic and inorganic. The study of chemistry and bulk materials is a beginning, but we are challenged even more by the complex structures and interactions in the living world.

Shortcomings in our methods cause the difficulty in finding out how fundamental laws are used in complex structures. The only way we know is to build up a model of the complex structure using only the fundamental laws and our idea of how it is built and then see if the model behaves in the same way as the real thing. Model-making is usually accomplished with mathematics rather than with real atoms, because the mathematics itself doesn't add more uncertainty to the model. With all due respect to mathematicians, the tools they provide are only barely adequate to begin the job of understanding natural structures, even with the aid of powerful computers. I do wish the mathematicians success in developing more powerful methods, because unless some other method of study is found, our success in understanding how nature's basic rules are utilized depends on them!

PURPOSE

I would now like to return to the analogy of the game player. We have discussed the rules and the strategy of play, but what about the object of the game? We have seen the difficulty man has in deciphering the laws of nature and how those laws are used. What are man's chances of at least figuring out the object of the game?

Among the many authors who have written on the meaning and definition of life, there is quite general agreement that one of the characteristics of life is "purposiveness," i.e., individuals, and even organs and smaller structures, all seem to be constructed with a purpose or goal in mind. The human body, for example, depends for its very life on the lungs' functions of taking in oxygen and eliminating carbon dioxide. The lungs, therefore, have a clear and necessary purpose in ensuring the well-being and survival of the body, and they are constructed to accomplish this job most effectively.

When it comes to deciphering the purpose of an individual, however, we encounter evidence for two distinct and quite opposite points of view. By far the more popular view is that each individual, whether amoeba or man, has self-survival as its first and foremost goal. According to this viewpoint, every characteristic of an individual (shape and coloration, means of obtaining food, method of defense, social habits, etc.) is geared toward survival of the individual at the expense of competitors.

There is also evidence for the contrary point of view, that the ultimate purpose of every individual lies in making its contribution to an overall pattern in nature. Those who hold this view argue that there really is no such thing as an isolated individual; everything depends on everything else and each, in contributing to the general welfare, ultimately assures its own well-being.

Supporters of both of these views can cite substantial evidence in nature, so what do we do?

I think this is precisely the situation Ellen White had in mind when she wrote that "to man's unaided reason, nature's teaching cannot but be contradictory and disappointing. Only in the light of revelation can it be read aright" [15]. So once again man finds a limitation. Even the object of the game in which he finds himself both participant and observer eludes him. But for this all-important question, man is provided with an answer directly from the One who made the rules and who works so masterfully and creatively within them. Through His revelation, in person and in word, we learn that the ultimate result of undivided self-interest is self-destruction and that the evidence for self-survival as a goal in nature is real, but is transient and will soon be eliminated.

GOD IN NATURE

God's revelation of Himself to us is quite clear on one point: that He is the originator and the source in nature. But He also reveals Himself as being continually involved with His creation, and here we run into some difficulty. How do we understand His involvement in terms that are compatible with our scientific efforts toward deciphering nature? To me, the most tantalizing statements on this subject are the following: "But the power of God is still exercised in upholding the objects of His creation. It is not because the mechanism once set in motion continues to act by its own inherent energy that the pulse beats, and breath follows breath" [16]. And in another place: "Not by its own inherent energy does the earth produce its bounties, and year by year continue its motion around the sun. An unseen hand guides the planets in their circuit of the heavens. A mysterious life pervades all nature, a life that sustains the unnumbered worlds throughout immensity, that lives in the insect atom which floats in the summer breeze, that wings the flight of the swallow and feeds the young ravens which cry, that brings the bud to blossom and the flower to fruit" [17].

I see three possible ways of understanding God's continual interaction with nature, any one or combination of which would fit the descriptions I just quoted. First, we might understand God's power in nature to be His continual upholding of the regularity that man calls natural law. Science has no way of proving that this regularity must exist or continue; we only observe it and depend on it. Second, we might understand God's influence beyond creation to be felt through the design of created objects, i.e., that He creates with built-in contingencies to take care of all possible future situations. Third, we might postulate that God exerts a direct influence in ways that we are not consciously aware of. He might do this either through a kind of natural process that we have never observed or He might use familiar laws in unfamiliar ways. There is good evidence that man's thought processes can be influenced (even by other men) without his being aware of it.

I can do no more than offer these possibilities for your consideration. I think it is well to keep in mind, however, that God reveals Himself as working in regular, constant, and orderly ways. Thus I think it is safe to say that God's primary interaction with nature will also be regular, constant, and dependable. Contrast this with the objects that man constructs. He may plan them to be automatic, but they inevitably require corrective supervision to make them do what they were intended, simply because man's capacity for planning ahead is so limited.

Part of God's interaction with His creation may be in the form of what man calls "miracles." This doesn't tell us much about His method, though, because we are not in a position to say whether miracles are or are not outside the regular laws of nature.

CONCLUSION

Summarizing, we can say that it is not necessary to invoke fundamental laws outside of those deciphered or potentially deciphered by physics and chemistry to explain life and mind. I come to this conclusion not because I know how to construct living matter or a mind with free will within known physical law, but because I cannot find an instance in living systems where physical law is broken and because man has such limited ability to use basic laws creatively, even, in fact, to decipher how they are used in nature. Analyzing the fundamental regularities in nature is likely to occupy the attention of some scientists into the foreseeable future. I see in living systems an even greater challenge: to learn how a few fundamental particles and interactions are used to construct systems of such great variety and complexity. While there is little hope of ever understanding nature, life, and mind completely, we can, if guided by a revelation of the central purpose in nature, at least hope to gain a greater appreciation of the God who created man in His own image.

REFERENCES

[1] Oparin, A. I. 1961. Life: its nature, origin and development. Trans. Ann Synge. Academic Press, New York, pp. 1-37.

[2] Müller, J. 1839. Elements of physiology. Trans. William Baly. 2nd edition. Taylor and Walton, London, pp. 1, 19-29.

[3] Bernard, C. 1957. An introduction to the study of experimental medicine. Dover Publications, Inc., New York, pp. 5, 31-32, 57-99, 183-184.

[4] Nagel, E. 1967. The nature and aim of science. In S. Morgenbesser, ed. Philosophy of science today, pp. 3-13. Basic Books, New York.

[5] Henkin, L. 1967. Truth and provability; Completeness. In S. Morgenbesser, ed. Philosophy of science today, pp. 14-35. Basic Books, New York.

[6] Jeffrey, R. C. 1967. Formal logic: its scope and limits. McGraw-Hill, New York, pp. 195-223.

[7] Wooldridge, D. E. 1963. The machinery of the brain. McGraw-Hill, New York.

[8] Ibid., p. 231.

[9] Lettvin, J. Y., Maturana, H. R., McCulloch, W. S., and Pitts, W. H. 1959. What the frog's eye tells the frog's brain. Proceedings of the Institute of Radio Engineers 47:1940-1951.

[10] Singh, J. 1966. Great ideas in information theory, language and cybernetics. Dover Publications, Inc., New York, pp. 127-144.

[11] Turing, A. M. 1964. Computing machinery and intelligence. Reprinted in A. R. Anderson, ed. Minds and machines. Prentice-Hall, Englewood Cliffs, New Jersey.

[12] Dreyfus, H. L. 1972. What computers can't do. Harper and Row, New York.

[13] Wooldridge, pp. 82-83.

[14] Olds, J. 1956. Pleasure centers in the brain. Scientific American 195 (October):105-116.

[15] White, E. G. 1952. Education. Pacific Press Publishing Association, Mountain View, California, p. 134.

[16] Ibid., p. 131.

[17] Ibid., p. 99.